A world of machine learning applications opens up when industrial networks include the Jetson™ Xavier system from NVIDIA®. The Xavier is based on the successful Tegra system and is the most powerful system in the NVIDIA® Jetson™ family. While the performance of the Xavier’s 8-core ARM processor can be matched by many systems, the real source of excitement is the 512-core Volta GPU with 64 Tensor Cores, which turns this compact module into a number-crunching monster. It can be used to detect patterns in signals or objects in images, operations that require high-volume matrix manipulation. This kind of computing performance is not normally available to PLCs, making the Xavier the perfect complementary system.

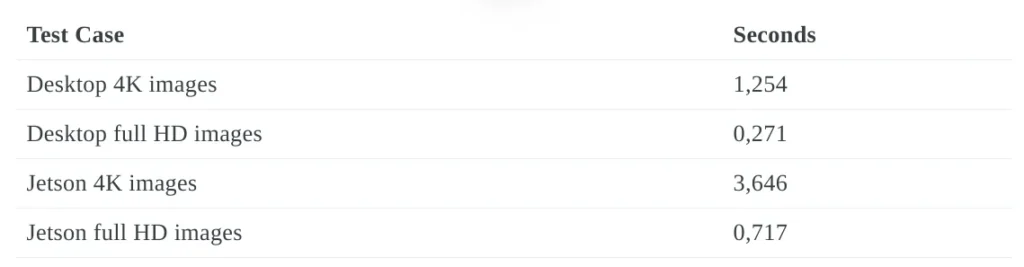

Our benchmark application uses a combination of image processing and machine learning to solve a bin-picking problem. The image processing part uses OpenCV to identify the outside edges of the parts, and the inference uses a retrained Faster R-CNN Inception model. Full HD images could be processed in just under 0.8 seconds, a little more than twice the time required by a desktop computer equipped with a GTX 1080 Ti graphics card. This is all the more impressive considering the Jetson’s maximum power consumption of 30 watts compared with the energy-hungry components of a desktop.
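The article does not show how the detector output is consumed. The sketch below assumes the retrained model was exported with the TensorFlow Object Detection API, whose outputs are normalised boxes in `[ymin, xmin, ymax, xmax]` order plus parallel score and 1-based class arrays; that export path, the label map `{1: "A", 2: "B"}`, the `Detection` type and the 0.5 score threshold are all assumptions for illustration. It filters weak detections and converts the boxes to pixel coordinates of a full HD frame:

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

# Hypothetical label map: class 1 = A side visible, class 2 = B side visible
LABELS: Dict[int, str] = {1: "A", 2: "B"}


@dataclass
class Detection:
    """A detected nut face with its bounding box in pixels (hypothetical type)."""
    label: str
    score: float
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    @property
    def centre(self) -> Tuple[float, float]:
        """Box centre in pixels, e.g. as input for a pixel-to-robot transform."""
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)


def to_detections(
    boxes: Sequence[Sequence[float]],
    scores: Sequence[float],
    classes: Sequence[float],
    width: int,
    height: int,
    min_score: float = 0.5,
    labels: Dict[int, str] = LABELS,
) -> List[Detection]:
    """Drop low-scoring detections and scale normalised boxes to pixels."""
    result: List[Detection] = []
    for box, score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue
        y0, x0, y1, x1 = box  # TF OD API order: ymin, xmin, ymax, xmax
        result.append(
            Detection(
                label=labels.get(int(cls), "unknown"),
                score=float(score),
                x_min=round(x0 * width),
                y_min=round(y0 * height),
                x_max=round(x1 * width),
                y_max=round(y1 * height),
            )
        )
    # Best candidates first, so downstream steps can stop early
    result.sort(key=lambda d: d.score, reverse=True)
    return result
```

For example, `to_detections(boxes, scores, classes, 1920, 1080)` keeps only confident detections; the A/B label then decides whether the part has to be flipped.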

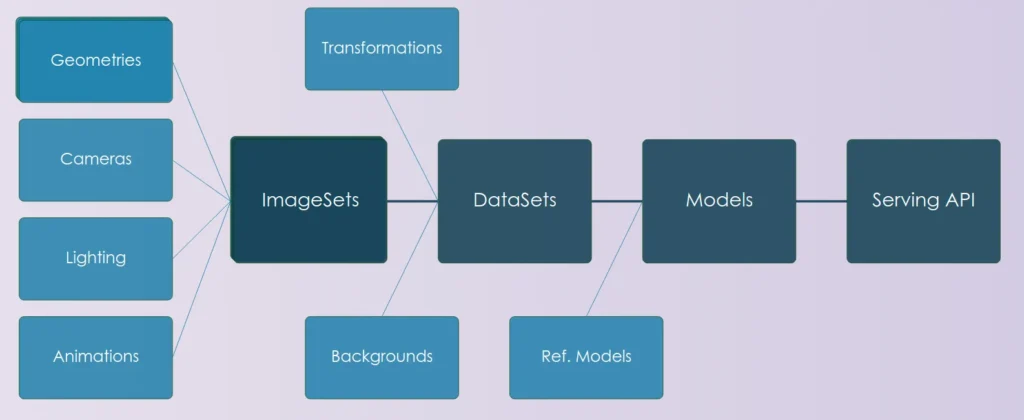

Choosing the right path for integrating such systems depends very much on the application. The possibilities include an MQTT broker, OPC UA or simple socket-based communication. We tested MQTT communication with a Beckhoff system and the OPC UA interface with a Siemens S7-1200 PLC. The benchmark application described here used the latter.
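The benchmark used OPC UA, but with the simpler socket-based option the targets have to be serialised into a layout the PLC program can map onto a fixed data structure. A minimal sketch, assuming a hypothetical layout of one 16-bit count followed by `MAX_TARGETS` records of three 32-bit REALs (x, y, angle) and one 16-bit INT (flip flag), all big-endian, which is the byte order S7 CPUs use. The record layout and the limit of 10 targets are illustrative choices, not taken from the article:

```python
import struct
from typing import Iterable, List, Tuple

MAX_TARGETS = 10                  # hypothetical fixed array size on the PLC side
HEADER = struct.Struct(">h")      # number of valid records (INT)
RECORD = struct.Struct(">fffh")   # x_mm, y_mm, angle_deg (REAL), flip flag (INT)


def pack_targets(targets: Iterable[Tuple[float, float, float, bool]]) -> bytes:
    """Serialise up to MAX_TARGETS targets into a fixed-length message."""
    items = list(targets)[:MAX_TARGETS]
    payload = bytearray(HEADER.pack(len(items)))
    for x, y, angle, flip in items:
        payload += RECORD.pack(x, y, angle, 1 if flip else 0)
    # Zero-fill unused slots so every message has the same length
    payload += bytes(RECORD.size * (MAX_TARGETS - len(items)))
    return bytes(payload)


def unpack_targets(data: bytes) -> List[Tuple[float, float, float, int]]:
    """Inverse of pack_targets, useful for testing the PLC-side layout."""
    (count,) = HEADER.unpack_from(data, 0)
    return [RECORD.unpack_from(data, HEADER.size + i * RECORD.size) for i in range(count)]
```

Sending is then a single `sock.sendall(pack_targets(targets))` on a socket opened with `socket.create_connection((plc_address, port))`, where the address and port are whatever the PLC program listens on. A fixed-length message keeps the PLC-side receive logic trivial.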

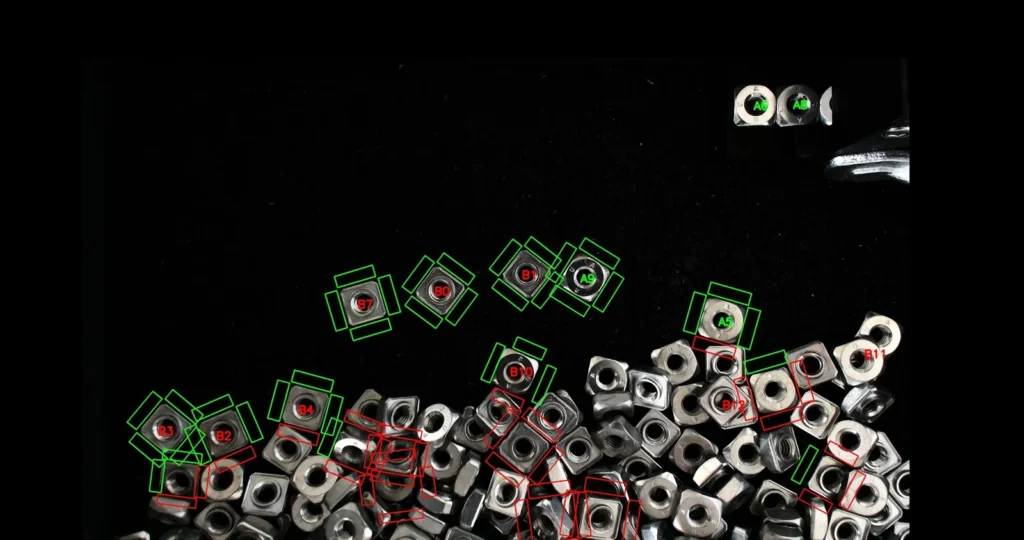

Output image of the benchmark application.

The benchmark application required the following functionality:

- Updating a full HD image from a USB webcam at 10 Hz (up to 60 Hz is possible)

- Periodically updating the robot position so that the images can be interpreted in robot coordinates (only necessary because the webcam was mounted on the robot arm)

- Masking the input image to hide the edges of the box, which sometimes showed reflections of the parts

- Using inference to detect the A and B sides of the nuts (parts lying on their B side had to be flipped)

- Using OpenCV to accurately detect the edges of isolated nuts

- Using OpenCV to determine if the nut can be gripped without the gripper colliding with the surrounding nuts

- Passing an array of potential targets with coordinates to the PLC via OPC UA
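Because the webcam rode on the robot arm, every image point has to be combined with the robot pose at capture time before it can be sent to the PLC. A minimal planar sketch, assuming a downward-looking camera at a fixed working height (so a constant `mm_per_px`), image axes aligned with the tool frame, and a fixed offset of the optical axis from the tool centre. `RobotPose`, `pixel_to_robot` and all parameters are hypothetical; a real cell would use a calibrated camera model:

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RobotPose:
    """Tool position in the robot base frame, as reported periodically by the PLC."""
    x_mm: float
    y_mm: float
    rz_deg: float  # rotation of the tool about the vertical axis


def pixel_to_robot(
    u: float,
    v: float,
    pose: RobotPose,
    mm_per_px: float,
    image_size: Tuple[int, int] = (1920, 1080),
    cam_offset_mm: Tuple[float, float] = (0.0, 0.0),
) -> Tuple[float, float]:
    """Map an image point (u, v) to base-frame (x, y) in millimetres."""
    cx, cy = image_size[0] / 2, image_size[1] / 2
    # Offset of the point from the tool centre, expressed in the tool frame (mm)
    dx = (u - cx) * mm_per_px + cam_offset_mm[0]
    dy = (v - cy) * mm_per_px + cam_offset_mm[1]
    # Rotate by the current tool angle, then translate by the tool position
    a = math.radians(pose.rz_deg)
    x = pose.x_mm + dx * math.cos(a) - dy * math.sin(a)
    y = pose.y_mm + dx * math.sin(a) + dy * math.cos(a)
    return x, y
```

The pose used must be the one valid when the frame was captured; pairing a frame with a stale pose shifts every target by however far the arm has moved in between.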

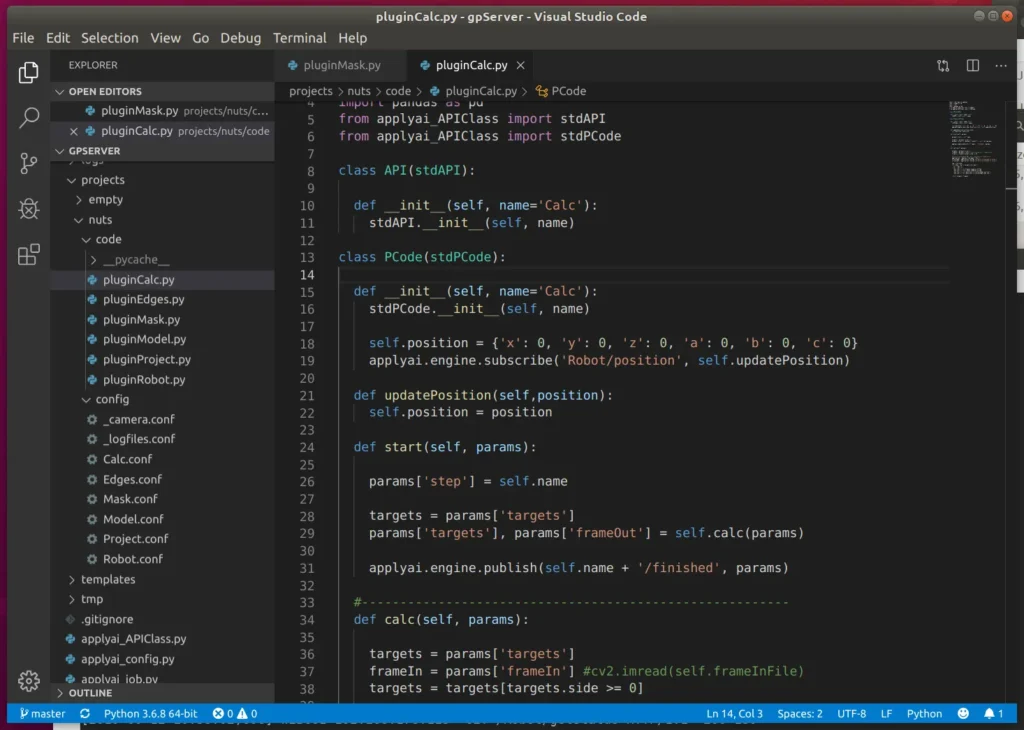

The above functionality was orchestrated by a lightweight application server, which included an HTTP server providing access to all configuration data, logs and images. The functions were implemented as plugins, each accessed via a standard API that included input and output images and an array of potential targets.
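The plugin idea can be sketched as a common interface that takes an image and the current targets and returns both, with a pipeline feeding each plugin the previous one's output. This is a minimal sketch; `Target`, `Plugin`, `Pipeline` and the example `ClearanceFilter` (a much simplified, distance-based stand-in for the gripper collision check, with a hypothetical `min_gap_mm`) are illustrative names, not the server's actual API:

```python
import math
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Any, List, Tuple


@dataclass
class Target:
    """A potential grip target in robot coordinates (hypothetical fields)."""
    x: float
    y: float
    angle: float = 0.0
    flip: bool = False


class Plugin(ABC):
    """Hypothetical plugin interface: image and targets in, image and targets out."""

    name = "plugin"

    @abstractmethod
    def process(self, image: Any, targets: List[Target]) -> Tuple[Any, List[Target]]:
        """Return the (possibly annotated) output image and the updated targets."""


class Pipeline:
    """Runs plugins in order, feeding each one the previous plugin's output."""

    def __init__(self, plugins: List[Plugin]) -> None:
        self.plugins = list(plugins)

    def run(self, image: Any) -> Tuple[Any, List[Target]]:
        targets: List[Target] = []
        for plugin in self.plugins:
            image, targets = plugin.process(image, targets)
        return image, targets


class ClearanceFilter(Plugin):
    """Keeps only targets whose neighbours are at least min_gap_mm away."""

    name = "clearance"

    def __init__(self, min_gap_mm: float) -> None:
        self.min_gap_mm = min_gap_mm

    def process(self, image: Any, targets: List[Target]) -> Tuple[Any, List[Target]]:
        kept = [
            t for t in targets
            if all(math.dist((t.x, t.y), (o.x, o.y)) >= self.min_gap_mm
                   for o in targets if o is not t)
        ]
        return image, kept
```

Because every plugin shares one signature, steps such as masking, inference or edge detection can be reordered or swapped through configuration, and the intermediate images are available for the HTTP server to display.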

Some may argue that the complete application could have been realised using OpenCV alone, as many similar applications are. This might even have proved to be the faster solution; however, the advantages of using inference in this application were substantial:

- The models replaced a large amount of application-specific code with data generated using a standardised process: the training consumed images generated automatically from imported geometry data of the nut.

- The models used for inference proved robust against changes in lighting, surpassing our previous experience with hand-coded solutions.